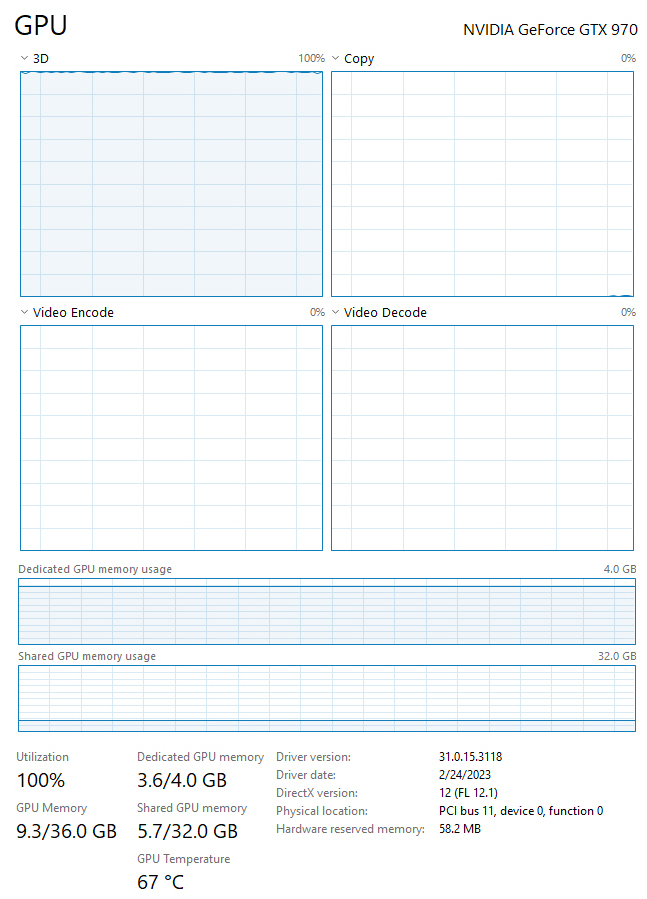

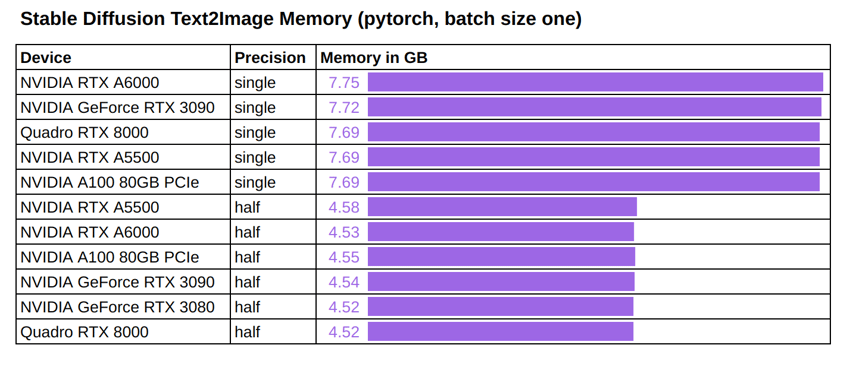

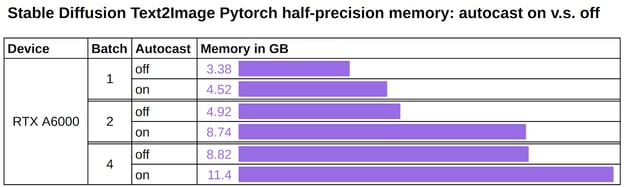

Get Huge SDXL Inference Speed Boost With Disabling Shared VRAM — Tested With 8 GB VRAM GPU - DEV Community

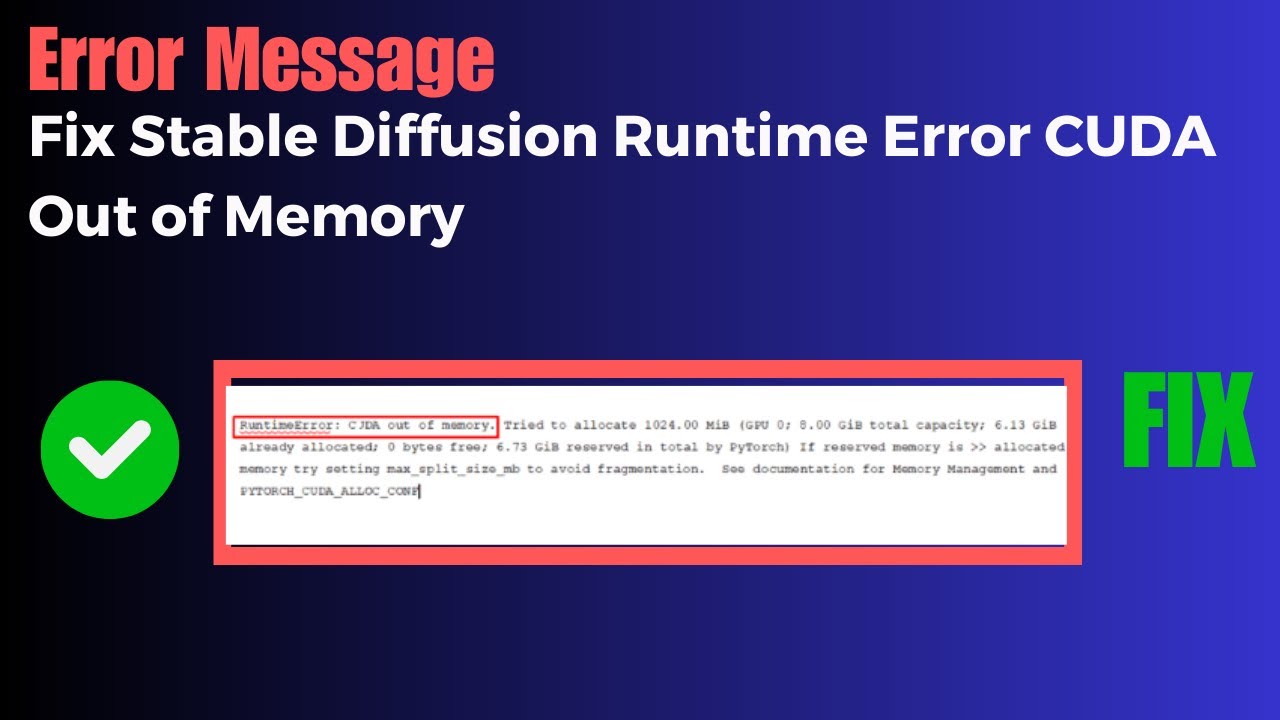

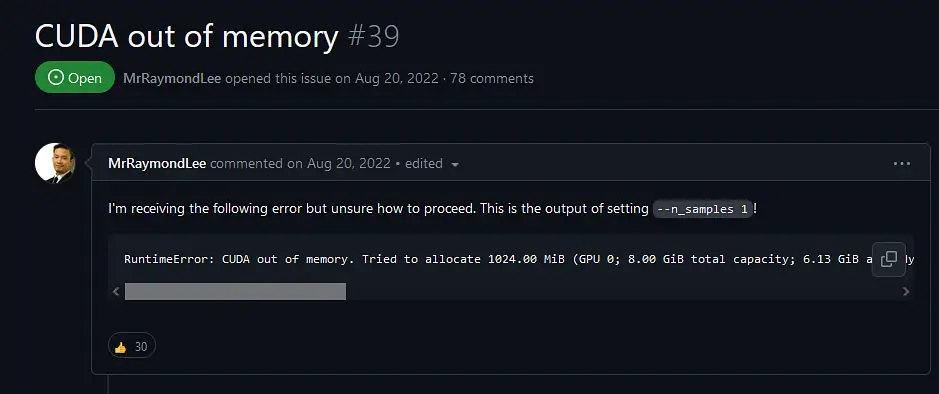

Could not allocate tensor with 377487360 bytes. There is not enough GPU video memory available! · Issue #38 · lshqqytiger/stable-diffusion-webui-directml · GitHub

Why does Stable Diffusion hold onto my VRAM even when it's doing nothing? It works great for a few images, and then it racks up so much VRAM usage it just won't

Furkan Gözükara on X: "Get Huge SDXL Inference Speed Boost With Disabling Shared VRAM — Tested With 8 GB VRAM GPU System Memory Fallback for Stable Diffusion https://t.co/bnTnJLS1Iz" / X