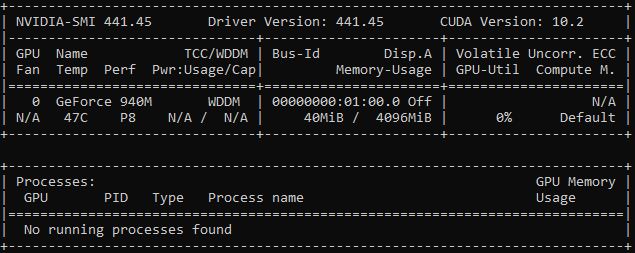

![gpu memory not released after run `sudo kill [pytorch process id]` · Issue #5736 · pytorch/pytorch · GitHub](https://user-images.githubusercontent.com/16065878/37334880-114926d6-26e8-11e8-97b5-0da1493e3ca5.png)

gpu memory not released after run `sudo kill [pytorch process id]` · Issue #5736 · pytorch/pytorch · GitHub

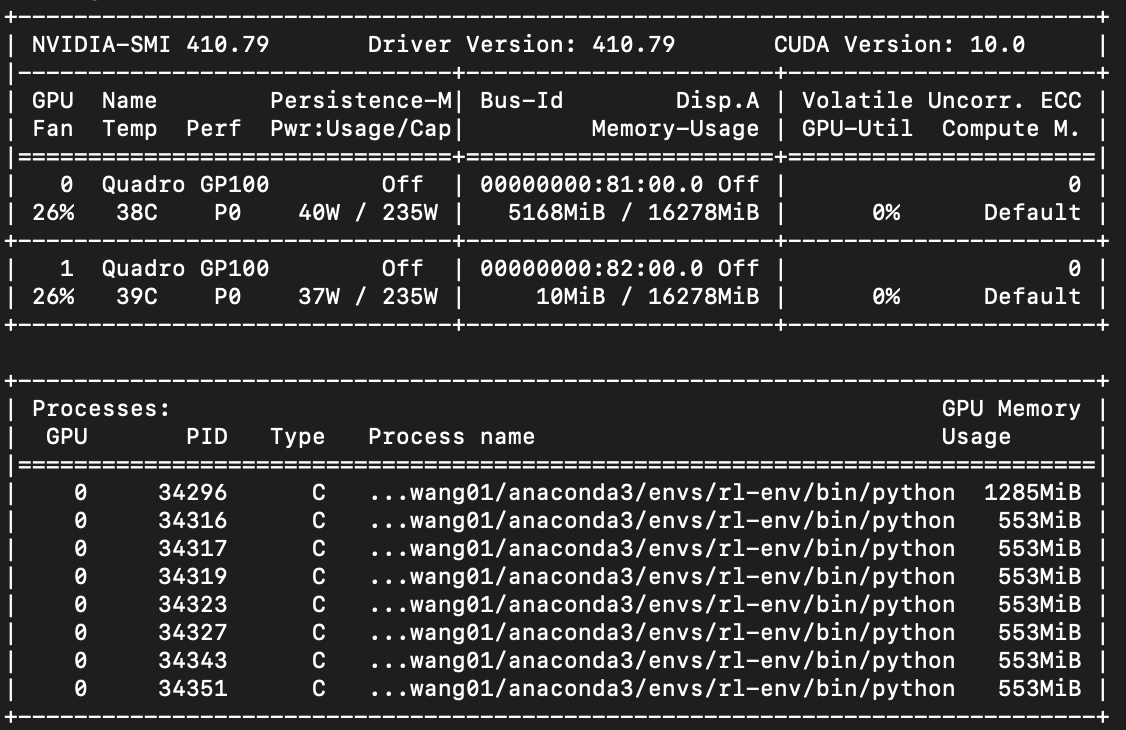

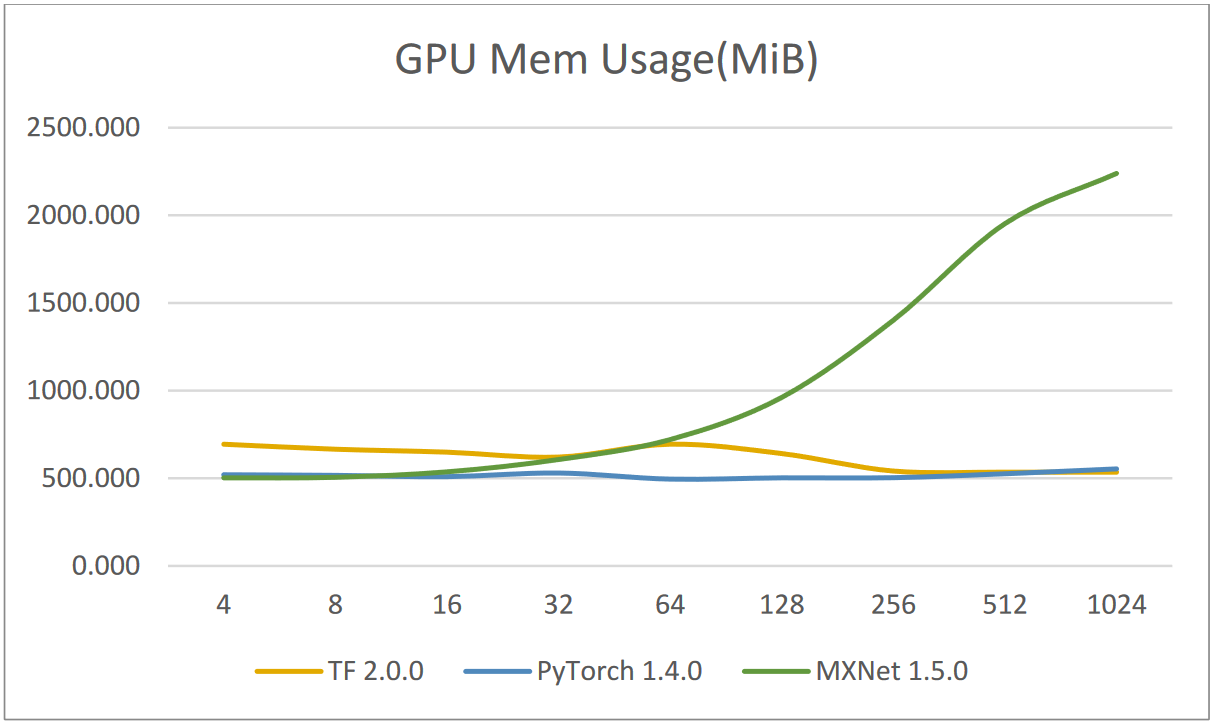

pytorch - Why tensorflow GPU memory usage decreasing when I increasing the batch size? - Stack Overflow

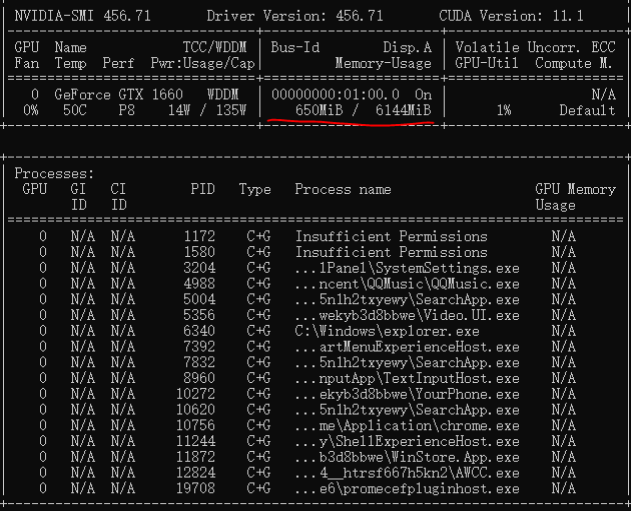

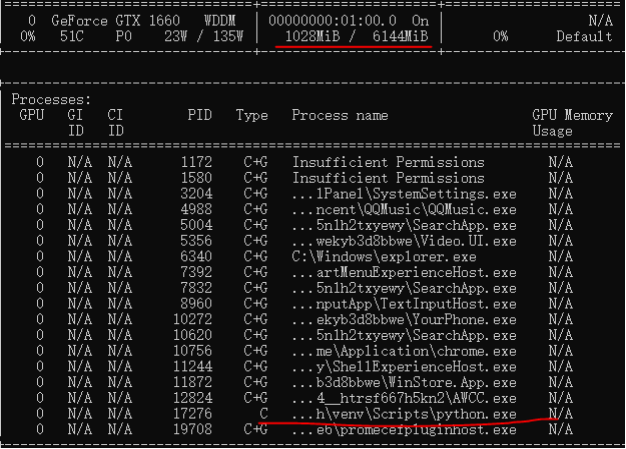

GPU Memory not being freed using PT 2.0, issue absent in earlier PT versions · Issue #99835 · pytorch/pytorch · GitHub

Release the GPU memory after backward() · Issue #171 · KevinMusgrave/pytorch-metric-learning · GitHub

deep learning - Pytorch: How to know if GPU memory being utilised is actually needed or is there a memory leak - Stack Overflow

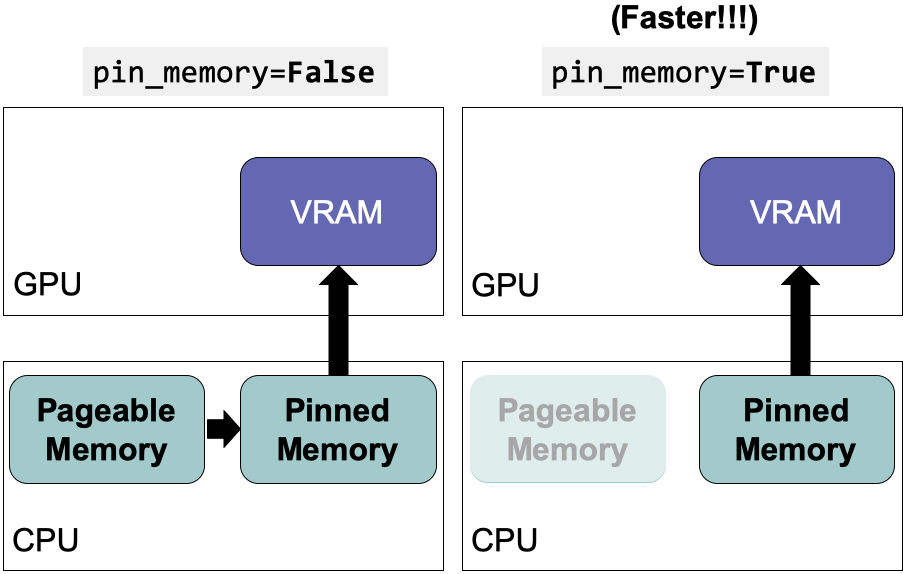

Optimize PyTorch Performance for Speed and Memory Efficiency (2022) | by Jack Chih-Hsu Lin | Towards Data Science

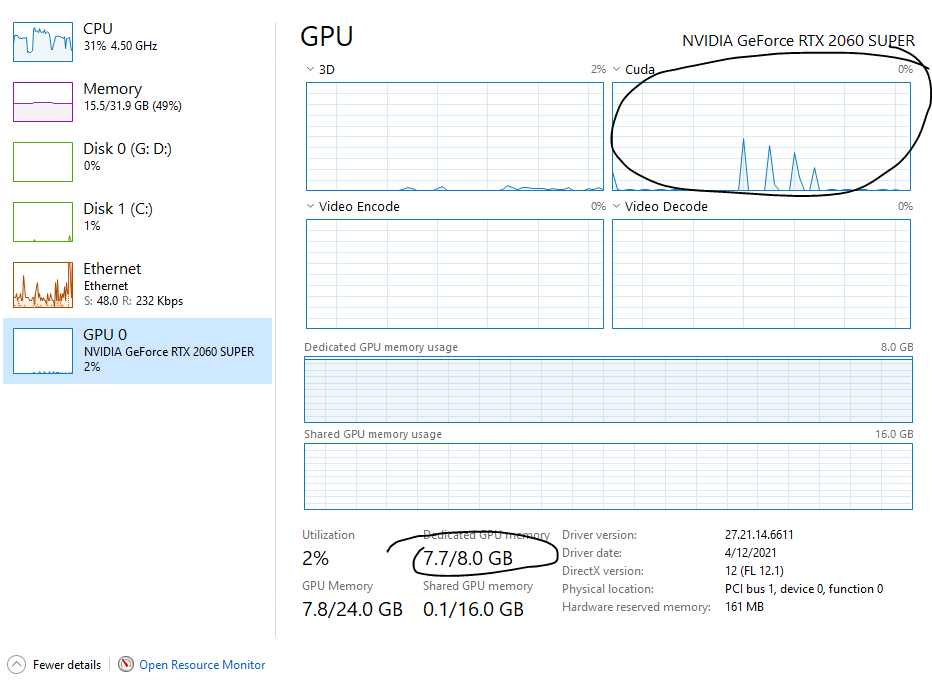

python - How can I decrease Dedicated GPU memory usage and use Shared GPU memory for CUDA and Pytorch - Stack Overflow