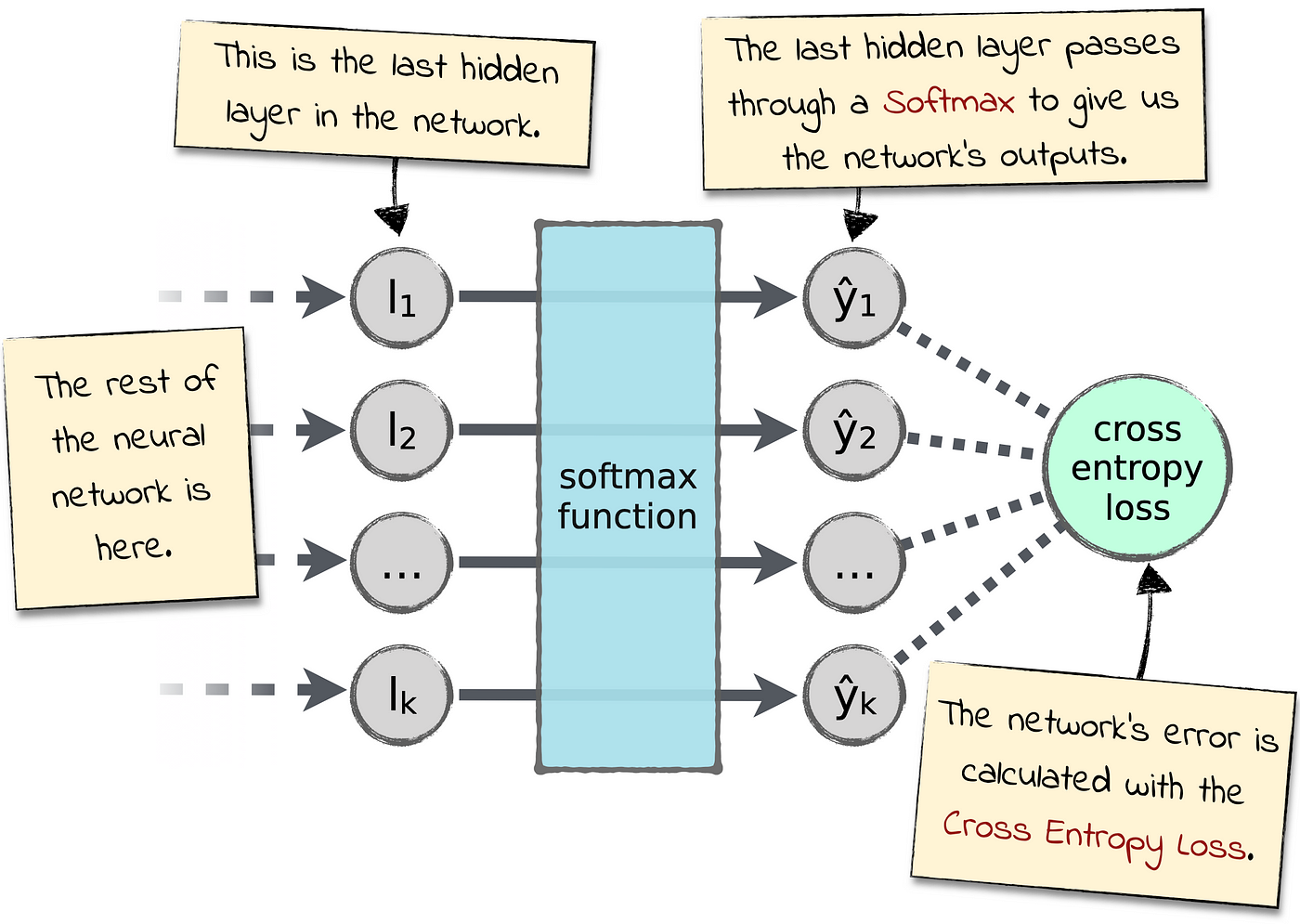

Why Softmax not used when Cross-entropy-loss is used as loss function during Neural Network training in PyTorch? | by Shakti Wadekar | Medium

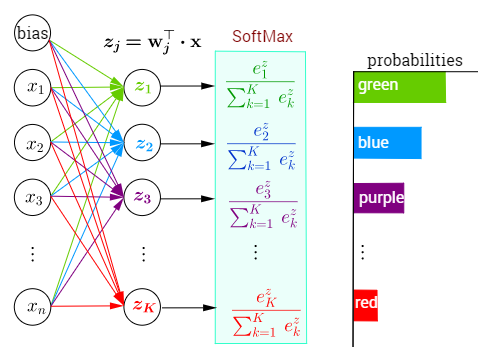

The structure of neural network in which softmax is used as activation... | Download Scientific Diagram

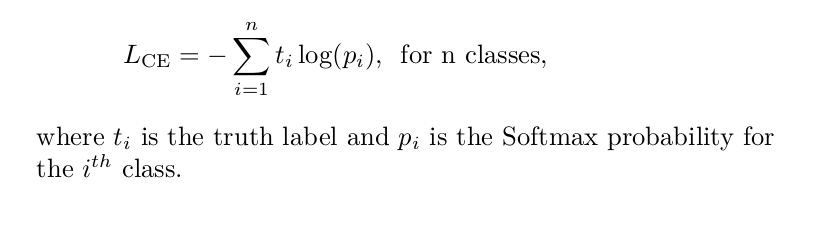

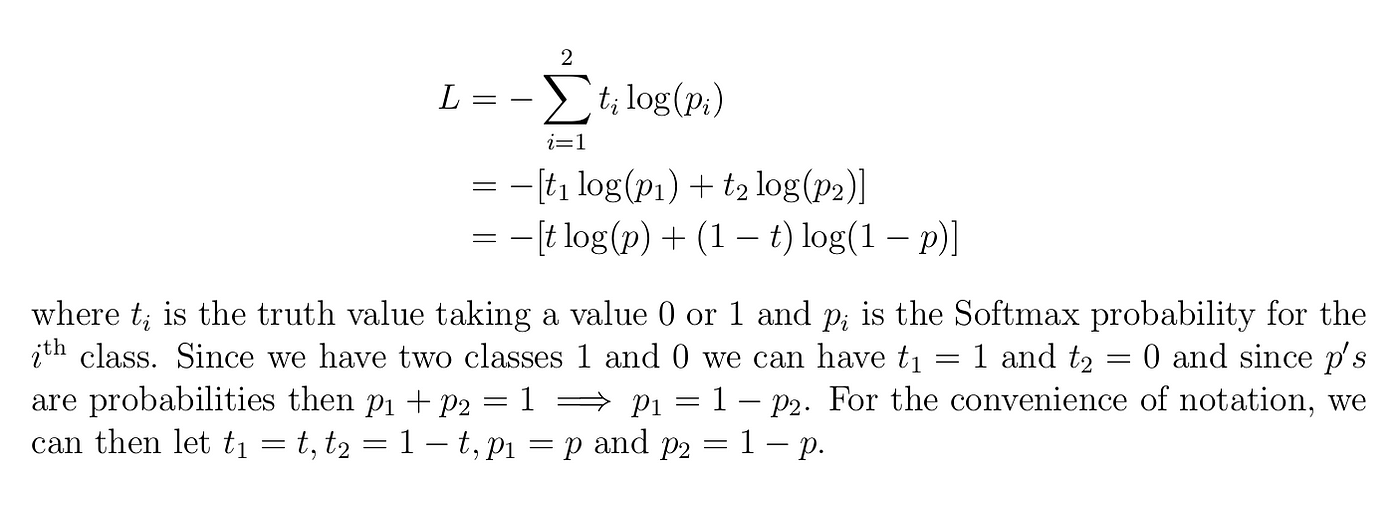

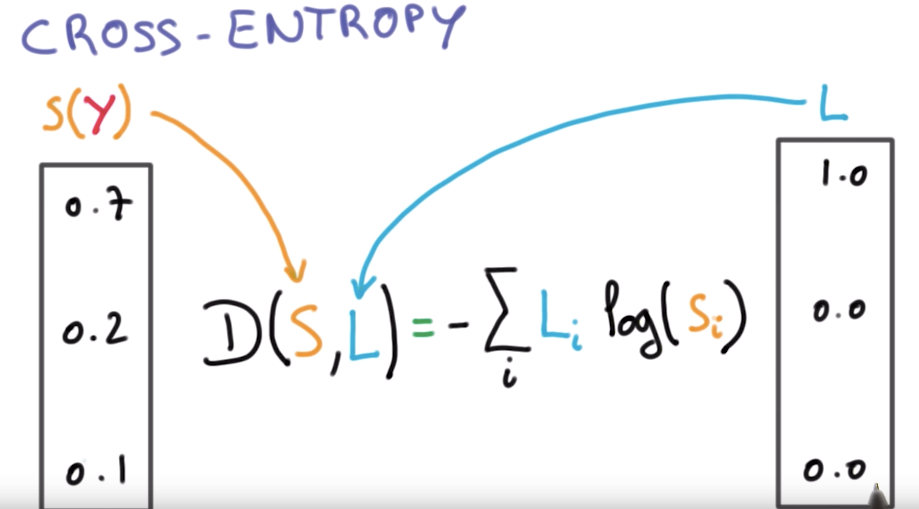

Cross-Entropy Loss Function. A loss function used in most… | by Kiprono Elijah Koech | Towards Data Science

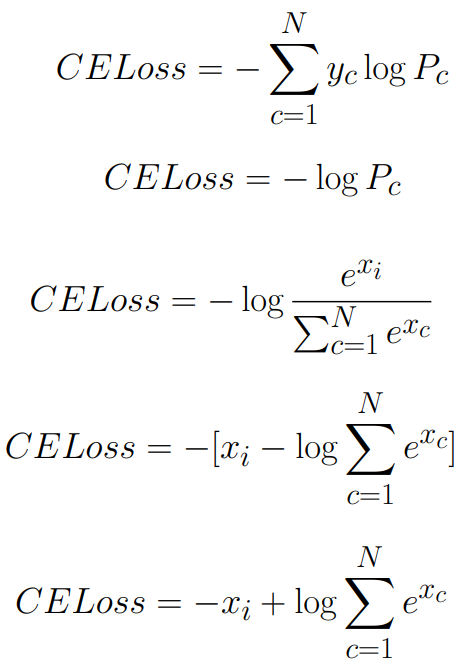

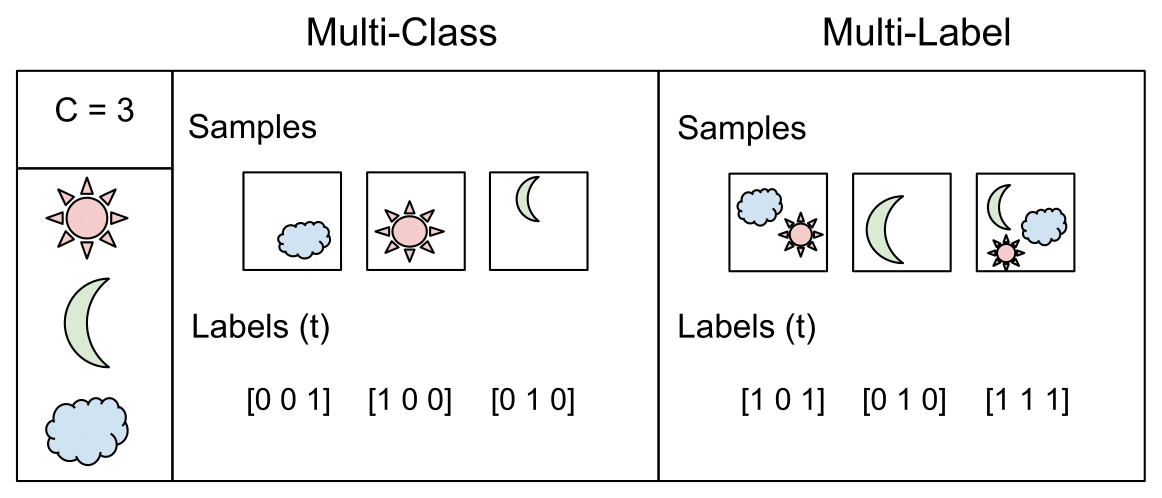

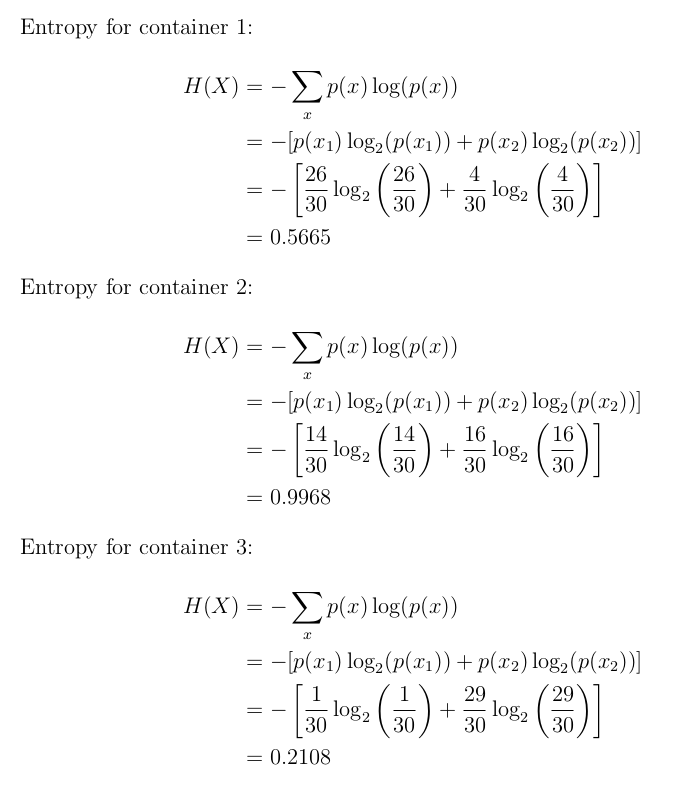

Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names

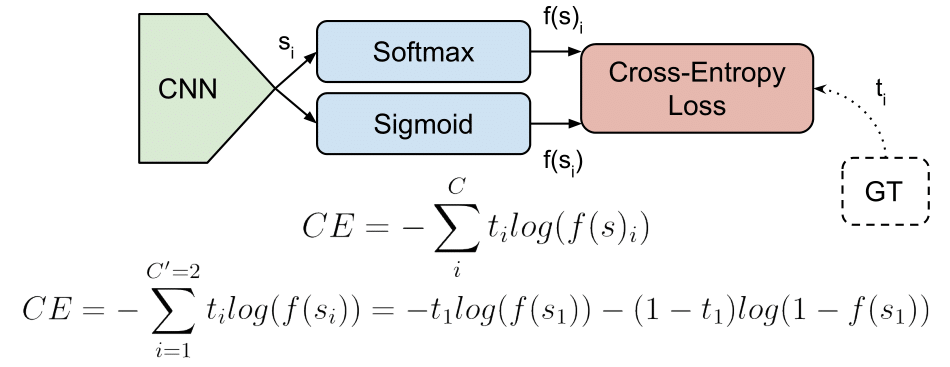

Softmax Cross Entropy Loss with Unbiased Decision Boundary for Image Classification | Semantic Scholar

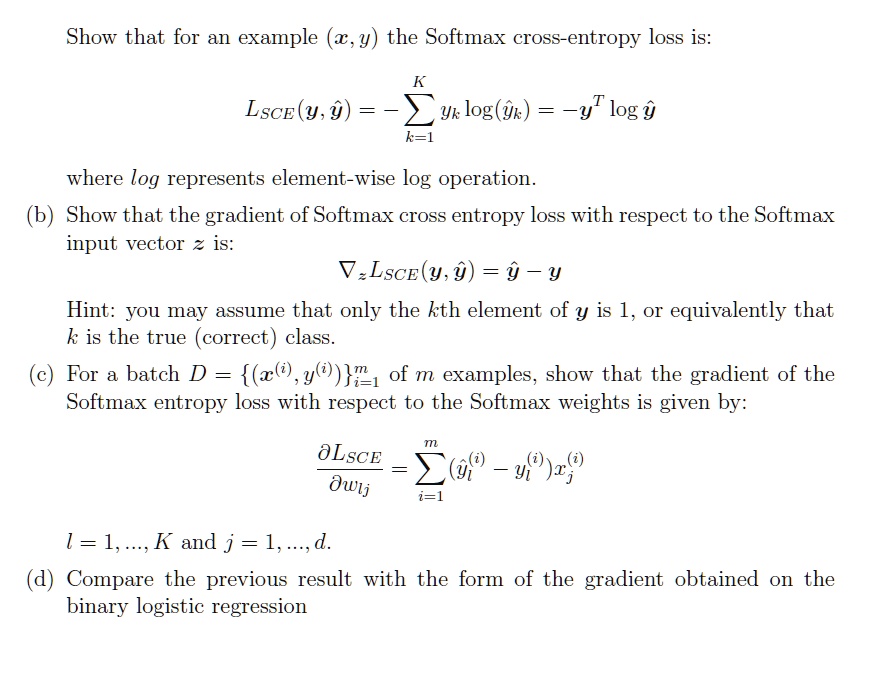

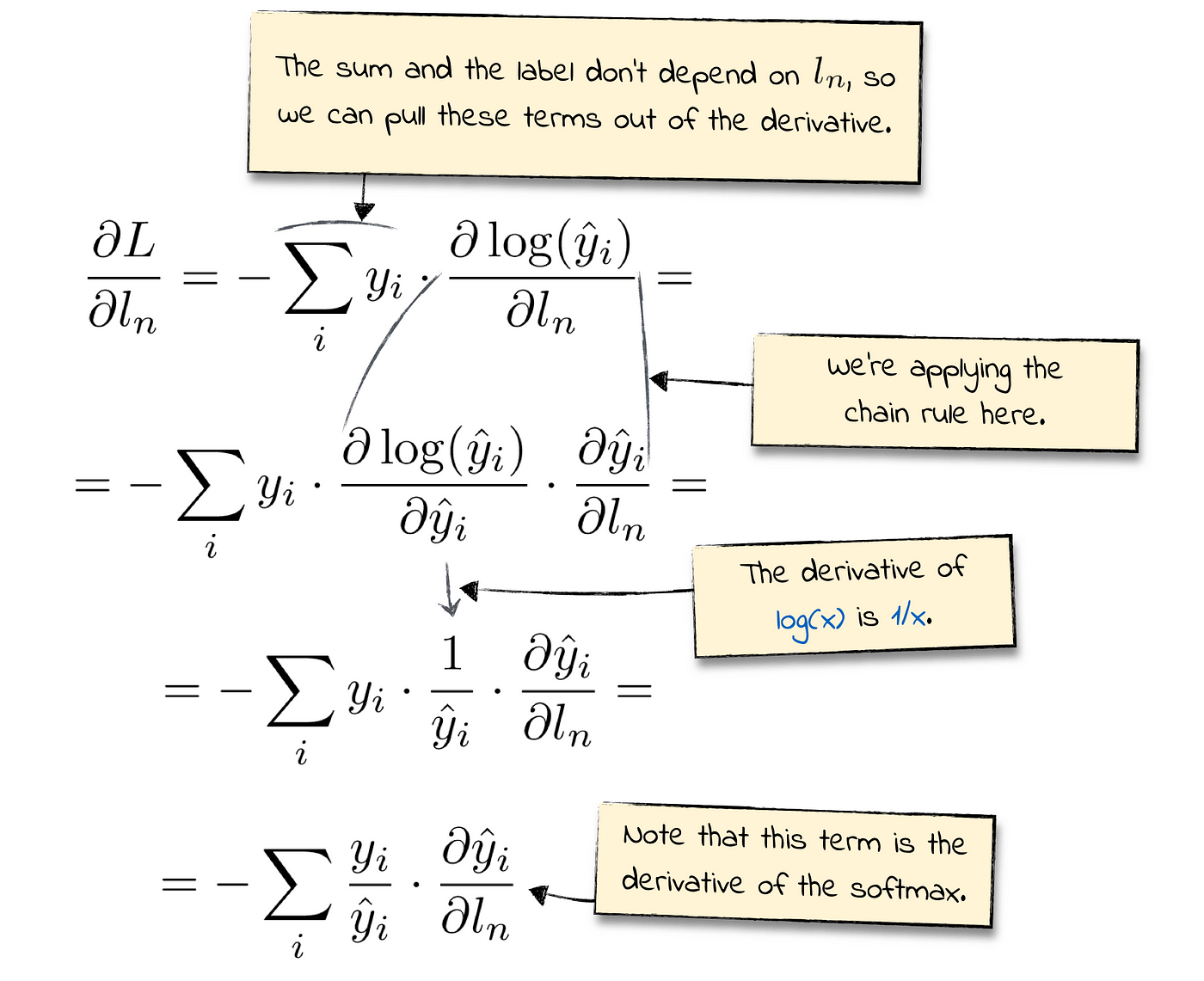

SOLVED: Show that for an example $(x, y)$, the softmax cross-entropy loss is $L_{SCE}(\hat{y}, y) = -\sum_k y_k \log \hat{y}_k = -y^\top \log \hat{y}$, where $\log$ represents the element-wise log operation. Show that the gradient of

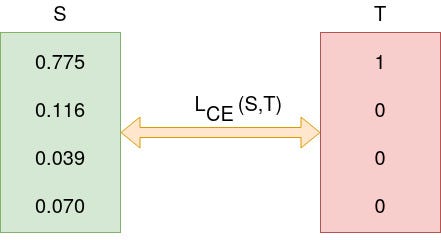

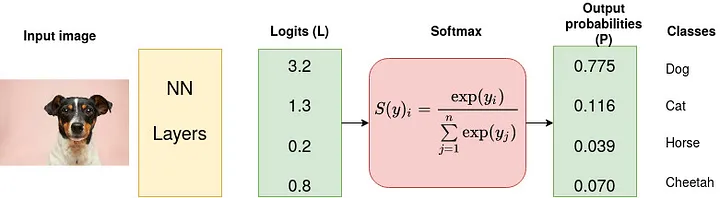

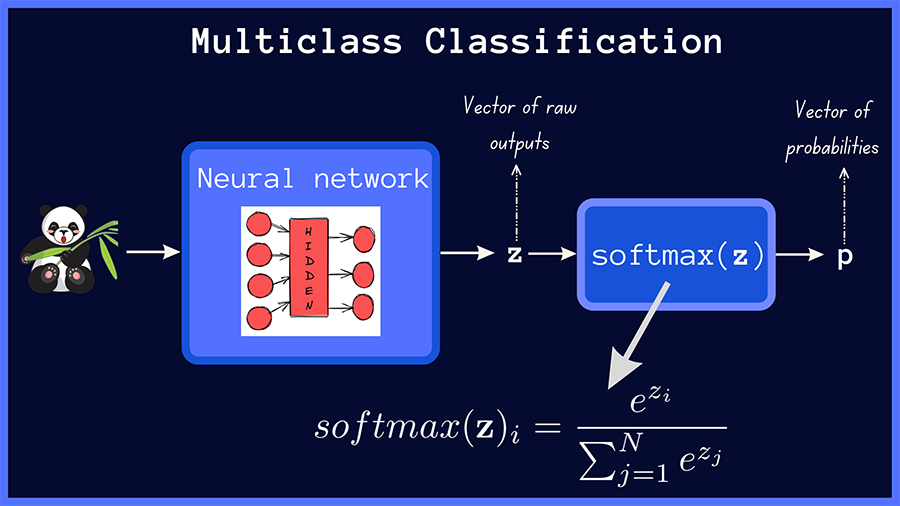

Understanding Logits, Sigmoid, Softmax, and Cross-Entropy Loss in Deep Learning | Written-Reports – Weights & Biases

![[DL] Categorial cross-entropy loss (softmax loss) for multi-class classification - YouTube](https://i.ytimg.com/vi/ILmANxT-12I/hq720.jpg?sqp=-oaymwEhCK4FEIIDSFryq4qpAxMIARUAAAAAGAElAADIQj0AgKJD&rs=AOn4CLDP2Mcvs9IEnETkFGUgaLNZ2t-iGg)